“Is stochastic thermodynamics the key to understanding the energy costs of computation?”

The relationship between the thermodynamic and computational properties of physical systems has been a major theoretical interest since at least the 19th century.

It has also become of increasing practical importance over the last half-century as the energetic cost of digital devices has exploded.

Importantly, real-world computers obey multiple physical constraints on how they work, and these constraints affect their thermodynamic properties. Moreover, many of these constraints apply both to naturally occurring computers, like brains or eukaryotic cells, and to digital systems.

Most obviously, all such systems must finish their computation quickly, using as few degrees of freedom as possible.

This means that they operate far from thermal equilibrium. Furthermore, many computers, both digital and biological, are modular, hierarchical systems with strong constraints on the connectivity among their subsystems.

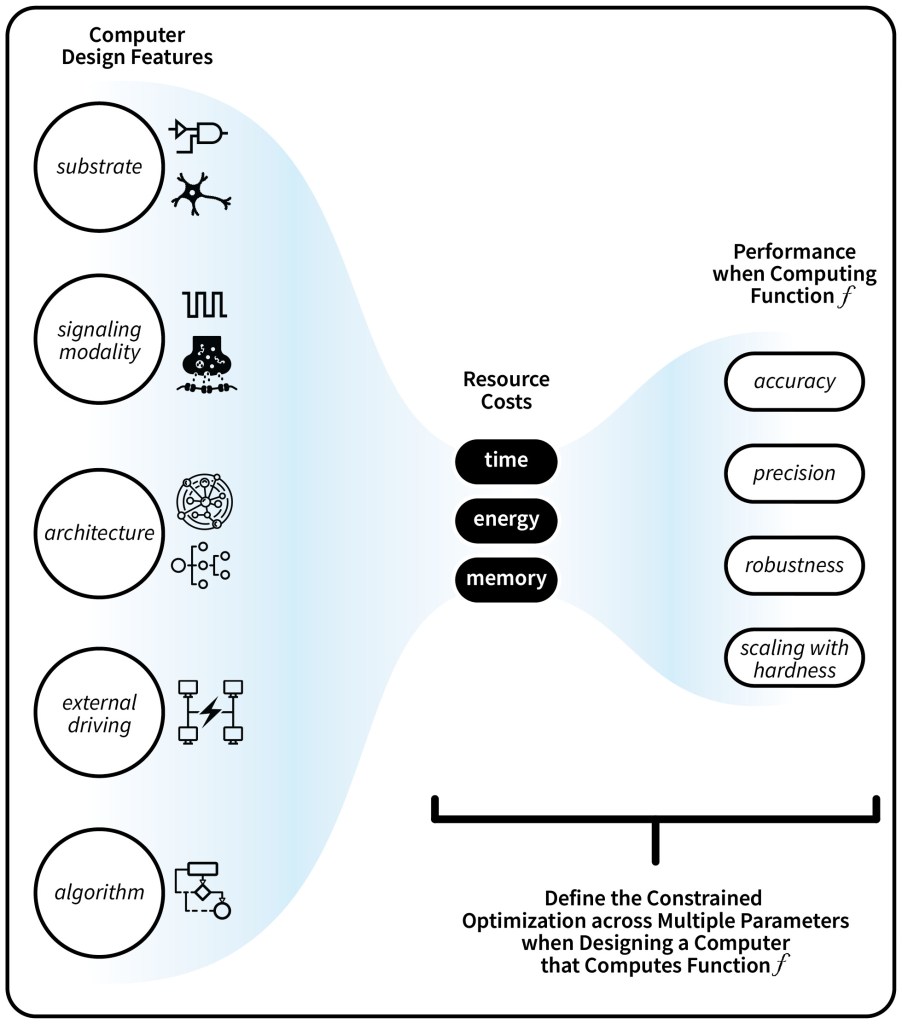

The map between a computer’s design features and performance is shown in the figure.

Yet another example is that, to simplify their design, digital computers are required to be periodic processes governed by a global clock. None of these constraints were considered in 20th-century analyses of the thermodynamics of computation. The new field of stochastic thermodynamics provides formal tools for analyzing systems subject to all of these constraints. We argue here that these tools may help us understand at a far deeper level just how the fundamental thermodynamic properties of physical systems are related to the computation they perform.

The energetic cost of computation is a long-standing, deep theoretical concern in fields ranging from statistical physics to computer science and biology. It has also recently become a major topic in the fight to reduce society’s energy costs.

Although CS theory has mostly focused on the resource costs of computation in terms of accuracy, time, and memory consumption, energy is another important cost that has barely been considered in the CS theory community. Until quite recently, most research on the thermodynamics of computation focused either on systems in equilibrium or on archetypal examples of small systems that perform only basic operations such as bit erasure.

In this paper, we argue that recent results in stochastic thermodynamics can provide a mathematical framework for quantifying the energetic costs of realistic computational devices, both artificial and biological. This may provide major benefits for the design of future artificial computers. It may also provide important new insights into biological computers.

Finally, by combining CS theory with the theoretical tools of stochastic thermodynamics, we may uncover important new insights into the mathematical nature of all physical systems that perform computation in our universe.