Whether artificial beings or machines could become self-aware or conscious has been debated by philosophers for centuries.

The main problem is that self-awareness cannot be observed from the outside. Whether a system is really self-aware or merely a clever imitation cannot be decided without knowledge of the mechanism’s inner workings.

The article by Patrick Krauss and Andreas Maier investigates common machine learning approaches with respect to their potential to become self-aware. The authors find that many important algorithmic steps toward machines with a core consciousness have already been taken.

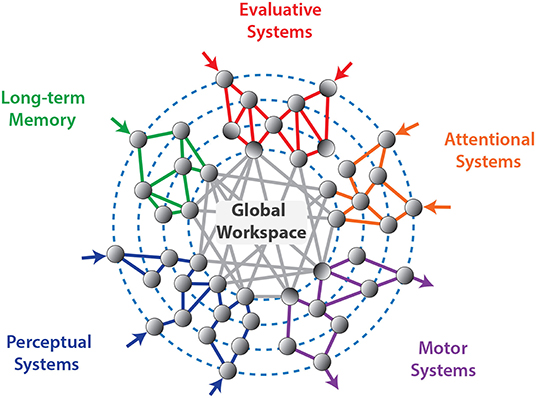

In the 1990s, Baars introduced the concept of a virtual “Global Workspace”, which emerges from connecting different brain areas, to describe consciousness. This idea was taken up and further developed by Dehaene. Today, alongside Integrated Information Theory, Global Workspace Theory is one of the two major theories of consciousness that are intensively discussed in cognitive neuroscience.

Based on the implications of this theory, i.e., that “consciousness arises from specific types of information-processing computations, which are physically realized by the hardware of the brain”, Dehaene argues that a machine endowed with these processing abilities “would behave as though it were conscious; for instance, it would know that it is seeing something, would express confidence in it, would report it to others, could suffer hallucinations when its monitoring mechanisms break down, and may even experience the same perceptual illusions as humans”. Indeed, it has been demonstrated recently that artificial neural networks trained on image processing can be subject to the same visual illusions as humans.

The protoself processes emotions and sensory input unconsciously. Core consciousness arises from the protoself and allows it to put itself into relation with the world. Projections of emotions give rise to higher-order feelings. With access to memory and extended functions such as language processing, extended consciousness emerges.

The main idea of Damasio’s model is to relate consciousness to the ability to identify one’s self in the world and to put that self in relation with the world. However, a formal definition is more complex and first requires the introduction of several concepts.

He introduces three levels of conscious processing:

1. The fundamental protoself does not possess the ability to recognize itself. It is a mere processing chain that reacts to inputs and stimuli like an automaton, completely non-conscious. As such, any animal has a protoself according to this definition, and more advanced lifeforms, including humans, also exhibit this kind of self.

2. The second stage is core consciousness. It is able to anticipate reactions in its environment and to adapt to them. Furthermore, it is able to recognize itself and its parts in its own image of the world, which enables it to anticipate and react to the world. However, core consciousness is volatile and cannot persist for hours to form complex plans.

   In contrast to many philosophical approaches, core consciousness does not require the world to be represented in words or language. In fact, Damasio believes that progress in understanding conscious processing has been impeded by dependence on words and language.

3. Extended consciousness enables human-like interaction with the world. It builds on top of core consciousness and enables further functions such as access to memory in order to create an autobiographic self. The ability to process words and language also falls under extended consciousness and can be interpreted as a form of serialization of conscious images and states.

In Damasio’s theory, emotions and feelings are fundamental concepts. In particular, Damasio differentiates emotions from feelings.

– Emotions are direct signals that indicate a positive or negative state of the (proto-)self.

– Feelings emerge only in conjunction with images of the world and can be interpreted as a second-order emotion that is derived from the world representation and future possible events in the world.

Both are crucial for the emergence of consciousness.

In his theory, consciousness does not merely emerge from the ability to identify oneself in the world or in an image of the world. Conscious processing additionally requires feeling oneself, in the sense of desiring to exist. Hence, he postulates a feeling, i.e., a derived second-order emotion, between the protoself and its internal representation of the world. Conscious beings as such want to identify themselves in the world and want to exist. From an evolutionary perspective, he argues, this is possibly a mechanism to enforce self-preservation.

In artificial intelligence (AI), numerous theories of consciousness exist. Implementations often focus on the Global Workspace Theory with only limited learning capabilities, i.e., most of the consciousness is hard-coded and not trainable. An exception is the theory by van Hateren, which closely relates consciousness to simultaneous forward and backward processing in the brain. Yet the algorithms investigated so far have made use of a global workspace and mechanistic, hard-coded models of consciousness. Following this line, research on minds and consciousness focuses on representation rather than on actual self-awareness.

Although representation will be important for creating human-like minds and general intelligence, a key factor in becoming conscious is the ability to identify a self in one’s environment.

A major drawback of purely mechanistic methods, however, is that complete knowledge of the model of consciousness is required in order to realize and implement them. As such, developing these models into higher forms such as Damasio’s extended consciousness would require a complete mechanistic model of the entire brain, including all connections.

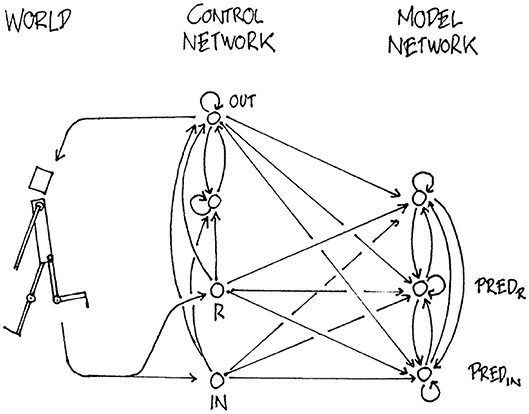

This model followed the idea of compressed neural representation.

Interestingly, compression is also key to inductive reasoning, i.e., learning from few examples, which we typically deem intelligent behavior.

The earliest work that the authors are aware of that attempts to model and create agents that learn their own representation of the world entirely through machine learning dates back to the early 1990s. As early as 1990, Schmidhuber proposed a model for dynamic reinforcement learning in reactive environments, and he found evidence for self-awareness in 1991. The model follows the idea of a global workspace. In particular, future rewards and inputs are predicted using a world model. Yet Schmidhuber lacked a theory of how to analyse intelligence and consciousness in this approach.
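The idea of predicting future inputs and rewards with a world model can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the toy environment `env_step`, the class `LinearWorldModel`, and the plain gradient-descent update are hypothetical and are not Schmidhuber's architecture. The sketch only shows an agent learning, from its own interaction, to predict the next observation and reward from the current observation and action.

```python
import random


def env_step(obs, action):
    """Toy reactive environment (hypothetical): linear dynamics and reward."""
    next_obs = 0.8 * obs + 0.5 * action
    reward = -0.3 * obs + 0.2 * action
    return next_obs, reward


class LinearWorldModel:
    """Predicts (next observation, reward) from (observation, action)."""

    def __init__(self):
        # One row per predicted quantity: [weight on obs, weight on action, bias].
        self.params = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

    def predict(self, obs, action):
        feats = (obs, action, 1.0)
        return tuple(sum(p * f for p, f in zip(row, feats)) for row in self.params)

    def update(self, obs, action, targets, lr=0.05):
        # One gradient step on the squared prediction error of each output.
        feats = (obs, action, 1.0)
        preds = self.predict(obs, action)
        for row, pred, target in zip(self.params, preds, targets):
            err = pred - target
            for i, f in enumerate(feats):
                row[i] -= lr * err * f


def train_world_model(steps=5000, seed=0):
    rng = random.Random(seed)
    model = LinearWorldModel()
    obs = 0.0
    for _ in range(steps):
        action = rng.choice((-1.0, 1.0))        # random exploration
        next_obs, reward = env_step(obs, action)
        model.update(obs, action, (next_obs, reward))
        obs = next_obs
    return model


model = train_world_model()
pred_obs, pred_reward = model.predict(1.0, 1.0)
true_obs, true_reward = env_step(1.0, 1.0)
print("predicted:", pred_obs, pred_reward)
print("actual:   ", true_obs, true_reward)
```

Because the toy environment is linear and noise-free, the learned predictions should closely match the environment after training. A real world model would replace the linear map with a trainable neural network.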

Can Consciousness Emerge in Machine Learning Systems?

“What I cannot create, I do not understand.”

Richard Feynman left these words on his blackboard in 1988 at the time of his death as a final message to the world.

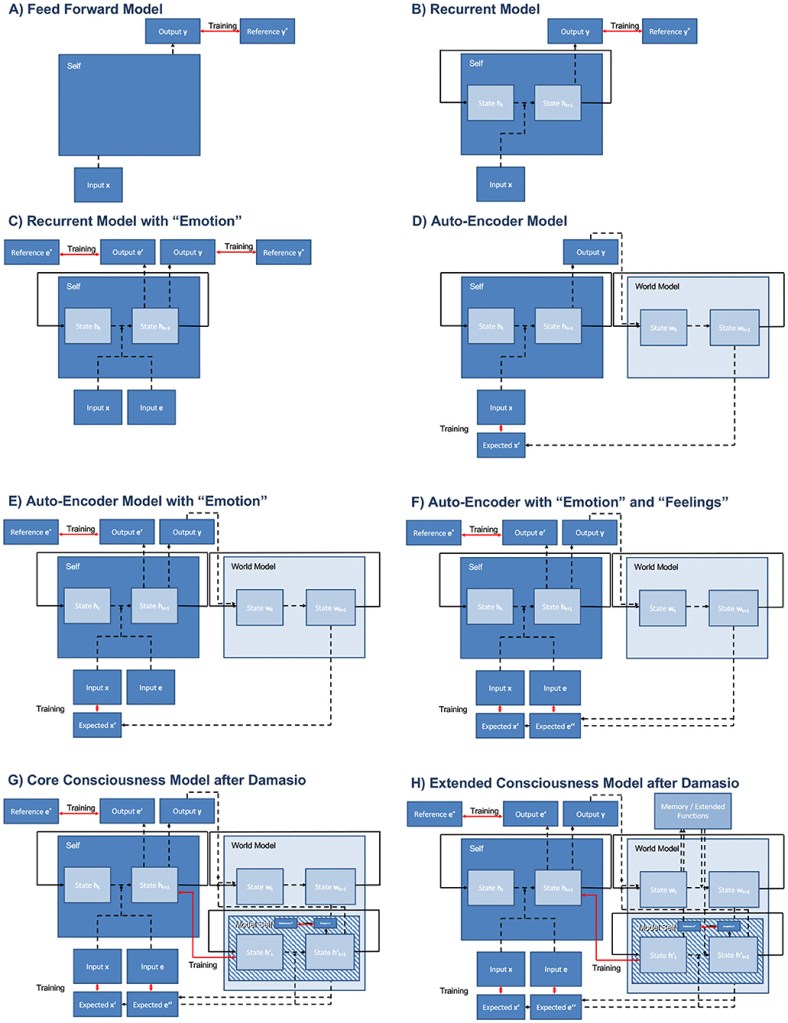

The figure provides an overview of important models depicted as box-and-arrow schemes, following the standard way of communicating neural network architectures within the machine learning community.

It denotes feed-forward connections as dashed black lines, recurrent connections as solid black lines, and training losses as red lines. Arrows indicate the direction of information flow.

The trainable feed-forward connections perform certain transformations on the input data, yielding the output. In the simplest case, each depicted feed-forward connection could be realized either as a direct link with trainable weights from the source module to the respective target module, a so-called perceptron, or with a single so-called hidden layer between source and target module. Such multi-layer neural networks are known to be universal function approximators. Without loss of generality, the depicted feed-forward connections could also be implemented by other deep feed-forward architectures comprising several stacked hidden layers, and could thus be inherently complex.
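The two simplest realizations of a feed-forward connection can be sketched in a few lines of plain Python. This is an illustrative sketch only: the function names `linear`, `one_hidden_layer`, and `init_weights`, the layer sizes, and the choice of `tanh` as activation are assumptions, and the weights are random rather than trained.

```python
import math
import random


def linear(x, weights, bias):
    """Direct link: one weighted sum of the inputs per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def one_hidden_layer(x, w1, b1, w2, b2):
    """Source -> hidden layer (tanh activation, an assumption) -> target."""
    hidden = [math.tanh(h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


def init_weights(n_out, n_in, rng):
    """Random weight matrix with n_out rows and n_in columns."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


rng = random.Random(0)
x = [0.5, -1.0, 2.0]                      # output of a source module (3 units)

# Variant 1: direct link from 3 source units to 2 target units.
w_direct, b_direct = init_weights(2, 3, rng), [0.0, 0.0]
y_direct = linear(x, w_direct, b_direct)

# Variant 2: the same connection with a hidden layer of 4 units in between.
w1, b1 = init_weights(4, 3, rng), [0.0] * 4
w2, b2 = init_weights(2, 4, rng), [0.0] * 2
y_hidden = one_hidden_layer(x, w1, b1, w2, b2)

print("direct link: ", y_direct)
print("hidden layer:", y_hidden)
```

Stacking further hidden layers in the same way gives the deeper feed-forward architectures mentioned above. In practice the weights would be learned by minimizing a training loss.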

While architectures (A–E) do not match theories of consciousness, architectures (F–H) implement theories by Schmidhuber and Damasio.

There are clearly theories that enable modeling and implementation of consciousness in the machine. On the one hand, they are mechanistic to the extent that they can be implemented in programming languages and require inputs similar to those humans receive. On the other hand, even the simple models in the figure above are already arbitrarily complex, as every dashed path in the models could be realized by a deep neural network comprising many different layers. As such, training will also be hard. Interestingly, the models follow a bottom-up strategy, so that training and development can be performed in analogy to biological development and evolution. The models can be trained and grown gradually toward more complex tasks.

Basic theories of consciousness can thus be implemented in computer programs. In particular, deep learning approaches are of interest because they make it possible to train deep approximators, which are not yet well understood, to construct mechanistic systems of complex neural and cognitive processes.

The authors reviewed several machine learning architectures, related them to theories of strong reductionism, and found that there are neural network architectures from which base consciousness could emerge.

Yet there is still a long way to go before human-like extended consciousness can be formed.

I would like to add a set of quotes – to give some additional perspective:

“It is possible that we will have super intelligence in a few thousand days.”

— Sam Altman The Intelligence Age

Or, as Grady Booch put it on X (Sept. 23, 2024):

“AI hype has no basis in reality and serves only to inflate valuations, inflame the public, garner headlines, and distract from the real work going on in computing.”