“Cognitive Biases in Fact-Checking and Their Countermeasures: A Review”

- We identify 39 cognitive biases that may compromise the fact-checking process.

- Through a systematic review, we highlight key categories of cognitive biases influencing human assessors.

- We propose a set of 11 countermeasures to mitigate the impact of cognitive biases on fact-checking activities.

- We describe the constituting elements of a bias-aware fact-checking pipeline.

Types of user tasks that may involve cognitive biases:

| Task | Description |
| --- | --- |
| Causal Attribution | Tasks involving an assessment of causality. |
| Decision | Tasks involving the selection of one over several alternative options. |
| Estimation | Tasks where people are asked to assess the value of a quantity. |
| Hypothesis Assessment | Tasks involving an investigation of whether one or more hypotheses are true or false. |
| Opinion Reporting | Tasks where people are asked to answer questions regarding their beliefs or opinions on political, moral, or social issues. |
| Recall | Tasks where people are asked to recall or recognize previous material. |
| Other | Tasks which are not included in one of the previous categories. |

Phenomena that affect human cognition:

| Flavor | Description |
| --- | --- |
| Association | Cognition is biased by associative connections between information items. |
| Baseline | Cognition is biased by comparison with (what is perceived as) a baseline. |
| Inertia | Cognition is biased by the prospect of changing the current state. |
| Outcome | Cognition is biased by how well something fits an expected or desired outcome. |
| Self-Perspective | Cognition is biased by a self-oriented viewpoint. |

Categorization of cognitive biases:

The task/flavor classification in the following table provides a structured way to understand how cognitive biases can influence the fact-checking process.

By mapping each bias to specific fact-checking tasks and cognitive influences, we aim to offer a comprehensive framework that aids researchers and practitioners in identifying and addressing potential biases in their work.

| Task | Association | Baseline | Inertia | Outcome | Self-Perspective |
| --- | --- | --- | --- | --- | --- |
| Causal Attribution | – | – | – | B26. Hostile Attribution Bias; B31. Just-World Hypothesis | B23. Fundamental Attribution Error; B30. Ingroup Bias |
| Decision | B4. Authority Bias; B5. Automation Bias; B22. Framing Effect | – | – | – | – |
| Estimation | B7. Availability Heuristic; B16. Conjunction Fallacy | B2. Anchoring Effect; B11. Base Rate Fallacy; B14. Compassion Fade; B21. Dunning-Kruger Effect; B35. Overconfidence Effect | B17. Conservatism Bias | B27. Illusion of Validity; B34. Outcome Bias | B32. Optimism Bias; B37. Salience Bias |
| Hypothesis Assessment | B6. Availability Cascade; B29. Illusory Truth Effect | – | – | B10. Barnum Effect; B12. Belief Bias; B15. Confirmation Bias; B28. Illusory Correlation | – |
| Opinion Reporting | – | B36. Proportionality Bias | B8. Backfire Effect | B9. Bandwagon Effect; B38. Stereotypical Bias | B19. Courtesy Bias |
| Recall | B24. Google Effect; B39. Telescoping Effect | – | B18. Consistency Bias | B13. Choice-Supportive Bias; B20. Declinism; B25. Hindsight Bias | – |
| Other | B3. Attentional Bias | – | – | B33. Ostrich Effect | – |

Note: The details on the biases presented in this table are discussed in the list of 39 cognitive biases selected in section 5 of the article, while a full list of the 221 cognitive biases considered is reported in Appendix B.
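As a rough illustration, the task/flavor grid can be stored as a lookup from bias to its (task, flavor) cell. The sketch below is not taken from the article: the names `Cell`, `BIAS_CELLS`, and `biases_for_task` are hypothetical, and only a handful of entries from the categorization table are included.

```python
from typing import NamedTuple


class Cell(NamedTuple):
    """One cell of the task/flavor grid (hypothetical helper type)."""
    task: str
    flavor: str


# A small subset of the categorization table, copied by hand.
# The full table maps many more biases; this is illustrative only.
BIAS_CELLS = {
    "B2. Anchoring Effect": Cell("Estimation", "Baseline"),
    "B5. Automation Bias": Cell("Decision", "Association"),
    "B15. Confirmation Bias": Cell("Hypothesis Assessment", "Outcome"),
    "B25. Hindsight Bias": Cell("Recall", "Outcome"),
    "B30. Ingroup Bias": Cell("Causal Attribution", "Self-Perspective"),
}


def biases_for_task(task: str) -> list[str]:
    """Return the listed biases whose cell belongs to the given task."""
    return [name for name, cell in BIAS_CELLS.items() if cell.task == task]


def biases_for_flavor(flavor: str) -> list[str]:
    """Return the listed biases whose cell belongs to the given flavor."""
    return [name for name, cell in BIAS_CELLS.items() if cell.flavor == flavor]
```

With such a structure, a practitioner designing, say, an estimation task could call `biases_for_task("Estimation")` to list the biases worth watching for.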

The literature allows us to specify 11 countermeasures that can be employed in a fact-checking context to help prevent the cognitive biases outlined in the previous table from manifesting.

| Task Phase | Countermeasure | Brief Description | Biases Involved |
| --- | --- | --- | --- |
| General Purpose | C7. Randomized or constrained experimental design | Employ a randomization process when pairing assessors and information items | Bias in General |
| General Purpose | C8. Redundancy and diversity | Use more than one assessor for each information item, and a variegated pool of assessors | Bias in General |
| General Purpose | C10. Time | Allocate an adequate amount of time for the assessors to perform the task | B2. Anchoring Effect; B9. Bandwagon Effect |
| Pre-Task | C4. Engagement | Put the assessors in a good mood and keep them engaged | |
| Pre-Task | C5. Instructions | Prepare a clear set of instructions to the assessors before the task | B21. Dunning-Kruger Effect; B35. Overconfidence Effect |
| Pre-Task | C11. Training | Train the assessors before the task | Bias in General |
| During the Task | C1. Custom search engine | Deploy a custom search engine | Bias in General |
| During the Task | C2. Inform assessors | Inform the assessors about AI-based support systems | B5. Automation Bias |
| During the Task | C3. Discussion | Synchronous discussion between assessors | Bias in General |
| During the Task | C6. Require evidence | Ask the assessors to provide supporting evidence | B2. Anchoring Effect; B8. Backfire Effect; B11. Base Rate Fallacy; B17. Conservatism Bias; B22. Framing Effect; B27. Illusion of Validity; B28. Illusory Correlation |
| During the Task | C9. Revision | Ask the assessors to revise the assessments | B2. Anchoring Effect; B3. Attentional Bias; B7. Availability Heuristic; B9. Bandwagon Effect; B11. Base Rate Fallacy; B22. Framing Effect; B37. Salience Bias |
| Post-Task | C8. Redundancy and diversity | Aggregate the final scores | Bias in General |
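To make two of these countermeasures concrete, here is a minimal sketch of C7 (randomized pairing of assessors and items) combined with C8 (several assessors per item, then aggregation of their scores). It is an illustration under stated assumptions, not the article's procedure: the function names and the `per_item` and `seed` parameters are hypothetical, and the median is just one possible aggregation rule, since the table only says to aggregate the final scores.

```python
import random
from statistics import median


def assign_assessors(items, assessors, per_item, seed=None):
    """C7 + C8: draw `per_item` distinct assessors at random for each item.

    `seed` is only there to make the illustration reproducible.
    """
    if per_item > len(assessors):
        raise ValueError("per_item cannot exceed the number of assessors")
    rng = random.Random(seed)
    # rng.sample draws without replacement, so no assessor repeats per item.
    return {item: rng.sample(assessors, per_item) for item in items}


def aggregate_scores(scores_by_item):
    """Post-task C8: combine redundant scores per item.

    Assumption: the median is used here because it is robust to one
    outlying assessor; the source does not prescribe a specific rule.
    """
    return {item: median(scores) for item, scores in scores_by_item.items()}
```

For example, `assign_assessors(["claim-1", "claim-2"], ["a", "b", "c", "d"], per_item=3)` gives each claim three randomly chosen assessors, and `aggregate_scores` then reduces their three scores to a single value per claim.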

It is important to note that our findings may not be generalizable to all possible fact-checking contexts or human populations, as cognitive biases may manifest differently depending on the specific context and individual differences.

Regarding the set of countermeasures presented, it should be noted that it is difficult to ascertain the extent to which the countermeasures are effective and general. Their effectiveness could be influenced by various factors, such as the specific context, individual differences, and the nature of the misinformation.

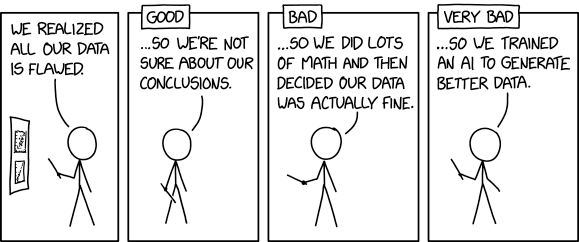

Some visuals to conclude 🙂