“Inferring to cooperate: Evolutionary games with Bayesian inferential strategies”

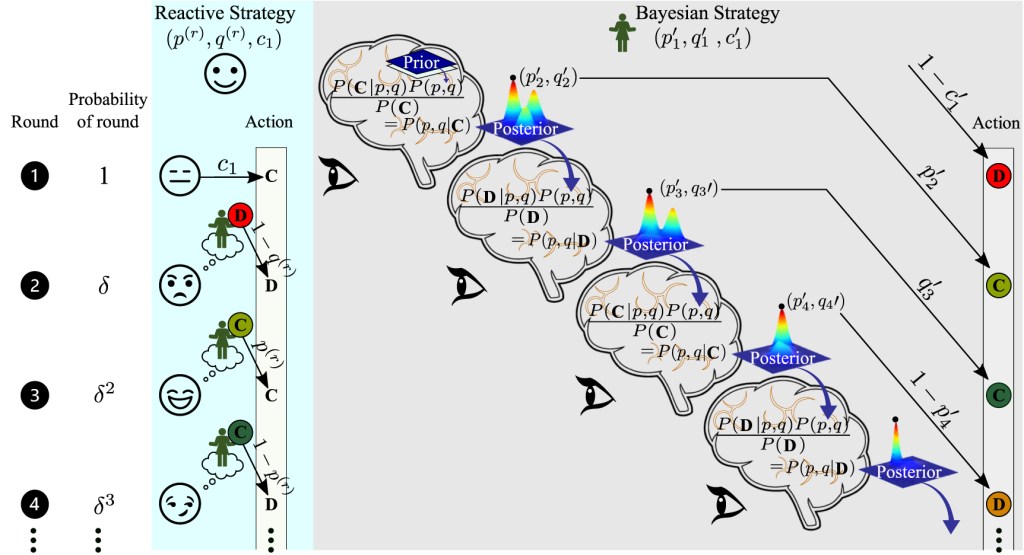

Strategies for sustaining cooperation and preventing exploitation by selfish agents in repeated games have mostly been restricted to Markovian strategies, in which the response of an agent depends only on the actions in the previous round. Such strategies are characterized by a lack of learning.

However, learning from accumulated evidence over time and using the evidence to dynamically update our response is a key feature of living organisms. Bayesian inference provides a framework for such evidence-based learning mechanisms. It is therefore imperative to understand how strategies based on Bayesian learning fare in repeated games with Markovian strategies.

Here, we consider a scenario where the Bayesian player uses the accumulated evidence of the opponent’s actions over several rounds to continuously update her belief about the reactive opponent’s strategy. The Bayesian player can then act on her inferred belief in different ways. By studying repeated Prisoner’s dilemma games with such Bayesian inferential strategies, in both infinite and finite populations, we identify the conditions under which such strategies can be evolutionarily stable. We find that a Bayesian strategy that is less altruistic than the inferred belief about the opponent’s strategy can outperform a larger set of reactive strategies, whereas one that is more generous than the inferred belief is more successful when the benefit-to-cost ratio of mutual cooperation is high.
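The belief update described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the opponent's reactive strategy is a pair (p, q), where p is the probability of cooperating after the Bayesian player cooperated and q the probability after she defected, and that each probability carries an independent uniform Beta(1, 1) prior. The class names `BetaBelief` and `ReactiveBelief` are hypothetical, and the conjugate Beta-Bernoulli update is one standard choice rather than necessarily the exact scheme used in this work.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief about a single cooperation probability."""
    alpha: float = 1.0  # assumed uniform prior: one pseudo-count of cooperation
    beta: float = 1.0   # assumed uniform prior: one pseudo-count of defection

    def update(self, cooperated: bool) -> None:
        # Conjugate update: each observed action adds one count.
        if cooperated:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    def mean(self) -> float:
        # Posterior mean of the cooperation probability.
        return self.alpha / (self.alpha + self.beta)


class ReactiveBelief:
    """Beliefs about the (p, q) of a reactive opponent (illustrative only)."""

    def __init__(self) -> None:
        self.p = BetaBelief()  # P(opponent cooperates | I cooperated last round)
        self.q = BetaBelief()  # P(opponent cooperates | I defected last round)

    def observe(self, my_previous_cooperated: bool, opponent_cooperated: bool) -> None:
        # The opponent's action is evidence only about the parameter that
        # was "active" given my own previous move.
        target = self.p if my_previous_cooperated else self.q
        target.update(opponent_cooperated)

    def estimate(self) -> tuple[float, float]:
        return self.p.mean(), self.q.mean()


belief = ReactiveBelief()
for my_prev, opp in [(True, True), (True, True), (True, True),
                     (True, False), (False, False), (False, False)]:
    belief.observe(my_prev, opp)
p_hat, q_hat = belief.estimate()
```

A Bayesian player could then act on `p_hat` and `q_hat` directly, or shift her cooperation probability below or above them; how the strategies in this work map the belief to an action is not specified by this sketch.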

Our analysis reveals how learning the opponent’s strategy through Bayesian inference, as opposed to utility maximization, can be beneficial in the long run, in preventing exploitation and eventual invasion by reactive strategies.

Can a strategy that attempts to learn the fixed reactive strategy of the opponent avoid being out-competed by extremely selfish strategies like (p ∼ 0, q ∼ 0) in a repeated PD game? The answer depends critically on the relative benefit of cooperation (r), the discount factor (δ), and the nature of the strategy (BTFT, GBTFT, or NBTFT) employed by the Bayesian player to update her actions. For low r and δ, predominantly selfish strategies dominate over Bayesian learning strategies, but the situation changes as the discount factor increases. As r increases, the Bayesian learning strategies become effective at resisting invasion by selfish reactive strategies even for low discount factors.
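To make the roles of r and δ concrete, the following sketch computes the expected discounted payoff of one reactive strategy against another. Several things here are assumptions made for illustration and not taken from this work: the game is parametrized as a donation game with cost 1 and benefit r, round t is weighted by δ^t, the sum is truncated after a fixed number of rounds, and the function name `discounted_payoff` and its arguments are hypothetical. Because a reactive strategy responds only to the opponent's last move, the marginal cooperation probabilities of the two players evolve linearly, which is what the loop iterates.

```python
def discounted_payoff(focal, opponent, r, delta, rounds=200, first_move=(1.0, 1.0)):
    """Expected discounted payoff of `focal` against `opponent`.

    focal, opponent: reactive strategies (p, q).
    r: benefit of receiving cooperation, with the cost of cooperating set to 1
       (an assumed donation-game parametrization).
    delta: weight multiplier applied per round (assumed reading of the discount factor).
    first_move: cooperation probabilities of (focal, opponent) in the first round.
    """
    p1, q1 = focal
    p2, q2 = opponent
    c1, c2 = first_move  # marginal cooperation probabilities in the current round
    total = 0.0
    weight = 1.0
    for _ in range(rounds):
        # Focal player gains r when the opponent cooperates, pays 1 when she does.
        total += weight * (r * c2 - 1.0 * c1)
        # Each player reacts to the other's move in the round just played.
        c1, c2 = q1 + (p1 - q1) * c2, q2 + (p2 - q2) * c1
        weight *= delta
    return total


# Mutual unconditional cooperation: payoff (r - 1) per round.
allc_vs_allc = discounted_payoff((1.0, 1.0), (1.0, 1.0), r=3.0, delta=0.5)

# An unconditional defector exploits tit-for-tat only in the first round.
alld_vs_tft = discounted_payoff((0.0, 0.0), (1.0, 0.0), r=3.0, delta=0.5,
                                first_move=(0.0, 1.0))
```

Under these assumptions, a one-round exploitation gain of r does not grow with δ, whereas the value of sustained mutual cooperation does, which is consistent with the qualitative dependence on r and δ described above.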

Even though the Bayesian player may end up being more cooperative than extremely selfish strategies during the exploration phase of the game when she is trying to learn the strategy of her opponent, she avoids exploitation in the long run by gradually becoming more selfish through effective learning of her opponent’s strategy. In general, the success of a Bayesian player depends on the extent to which she can leverage the higher benefits of mutual cooperation against a cooperative opponent while avoiding being exploited by a more selfish opponent.

In order to better understand the key underlying causes behind altruistic behaviour in the natural world, it is important to take into account realistic ways in which animals learn and take decisions.

Decision making is often modulated by learning as well as cognitive constraints in factoring and processing a diverse range of stimuli from the environment.

Accounting for those constraints will enable us to build more realistic models for understanding altruistic behaviour in social groups.

The Bayesian framework developed in this work is the first step in incorporating sophisticated statistical learning mechanisms like Bayesian learning in altruistic decision-making. We hope to eventually address scenarios in which deviations from Bayesian inference, perhaps induced by cognitive constraints, can also affect patterns of altruistic behaviour in social groups. Such investigations will hopefully make it possible to design and implement protocols that encourage altruistic behaviour leading to greater benefits for society at large.